Common Data Integration Techniques and Technologies in 2025

Combining data from multiple, disparate sources has long been a challenge. In 1991, researchers at the University of Minnesota designed one of the earliest data integration systems. It used the ETL approach, extracting, transforming, and loading data from multiple systems and sources into a unified view.

This blog will discuss the various data integration techniques and technologies.

11 Types of Data Integration Techniques

The process of consolidating data from multiple applications and creating a unified view is known as data integration. Businesses use different data integration tools with a variety of applications, technologies, and techniques to integrate data from disparate sources and create a single version of the truth (SSOT).

Data integration techniques are the different strategies and approaches used to combine data from multiple sources in a single destination. These techniques have evolved rapidly over the last decade. For a long time, extract, transform, load (ETL) was the dominant data integration technique, used mainly for batch processing. However, as businesses added more sources to their data ecosystems, the need for real-time data integration arose, and new techniques and technologies were introduced to meet it.

Common data integration techniques include:

- ETL (extract, transform, load)

- ELT (extract, load, transform)

- CDC (change data capture)

- Data Replication

- API-Based Integration

- Data Consolidation

- Data Federation

- Middleware Integration

- Data Propagation

- Enterprise Information Integration (EII)

- Enterprise Data Replication (EDR)

Organizations draw data from a wide range of internal and external sources, and the right data integration technique depends on the disparity, complexity, and number of sources involved. Let’s look at each of these techniques individually and see how it can help improve business processes.

Extract, Transform, Load (ETL)

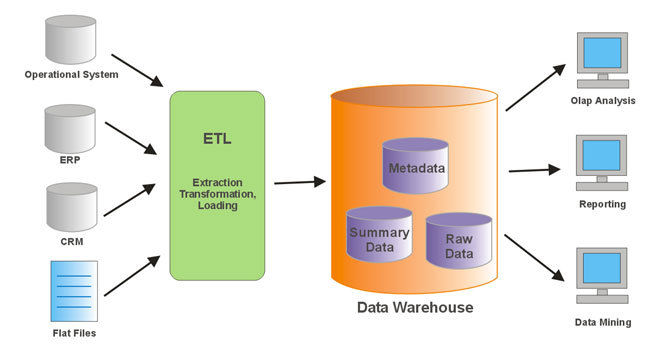

ETL (Extract, Transform, Load), the best-known data integration technique, is a process that extracts data from a source system, transforms it, and then loads it into a target destination. It has long been the standard way of integrating data, and organizations typically use dedicated ETL tools to automate it.

The primary use of ETL is to consolidate data for BI and analytics. It can run in batches or in near-real time. The ETL process involves extracting data from a database, ERP solution, cloud application, or file system, transforming it, and loading it into another database or data repository. The transformations performed on the data vary depending on the specific data management use case. However, common transformations include data cleansing, aggregation, filtering, joins, and reconciliation.
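To make the three stages concrete, here is a minimal batch ETL sketch in Python. It assumes a hypothetical CSV export of orders and uses an in-memory SQLite database as a stand-in for the target warehouse. The key point is the ordering: cleansing, filtering, and aggregation all happen in Python before anything reaches the target.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (e.g., an ERP); note the messy values.
RAW_ORDERS = """order_id,region,amount
1, north ,120.50
2,South,80
3,north,
4,SOUTH,40.25
"""

def extract(raw_text):
    """Extract: read rows from the source export."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Transform: cleanse, filter out incomplete rows, and aggregate by region."""
    totals = {}
    for row in rows:
        amount = row["amount"].strip()
        if not amount:  # filter: drop rows with a missing amount
            continue
        region = row["region"].strip().lower()  # cleanse: normalize region names
        totals[region] = totals.get(region, 0.0) + float(amount)
    return sorted(totals.items())

def load(conn, records):
    """Load: write the already-transformed records into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales_by_region (region TEXT PRIMARY KEY, total REAL)")
    conn.executemany("INSERT OR REPLACE INTO sales_by_region VALUES (?, ?)", records)
    conn.commit()

warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(extract(RAW_ORDERS)))
print(warehouse.execute("SELECT region, total FROM sales_by_region ORDER BY region").fetchall())
# prints [('north', 120.5), ('south', 120.25)]
```

Only the aggregated, cleaned result is stored in the target; the raw export never lands there.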

Extract, Load, Transform (ELT)

ELT (Extract, Load, Transform) is another data integration approach, closely related to ETL. While ETL focuses on extracting data from source systems, transforming it, and then loading it into a target system (such as a data warehouse), ELT reverses the sequence of the last two steps, i.e., the data is loaded before it is transformed.

ELT is particularly associated with big data and data warehousing scenarios where the target system has the processing power to handle large-scale transformations. This approach leverages the capabilities of modern data warehouses and big data platforms, allowing transformations to take place closer to the storage, often in a parallel and distributed fashion.

Popular cloud-based data warehouses, such as Amazon Redshift, Google BigQuery, and Snowflake, often support ELT processes, enabling organizations to harness the benefits of scalable and parallel processing for data transformation within the data storage environment.
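For contrast, a minimal ELT sketch loads the raw rows untouched and then runs the transformation as SQL inside the target. SQLite stands in here for a cloud warehouse, and the table names are hypothetical; a real warehouse such as Snowflake or BigQuery would execute similar SQL on its own scalable compute.

```python
import sqlite3

# Hypothetical raw rows as they arrive from a source system (no cleanup yet).
raw_orders = [
    (1, " north ", "120.50"),
    (2, "South", "80"),
    (3, "north", ""),
    (4, "SOUTH", "40.25"),
]

warehouse = sqlite3.connect(":memory:")

# Extract + Load: land the data as-is in a raw/staging table.
warehouse.execute("CREATE TABLE raw_orders (order_id INTEGER, region TEXT, amount TEXT)")
warehouse.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_orders)

# Transform: use the target's own SQL engine to cleanse, filter, and aggregate.
warehouse.execute("""
    CREATE TABLE sales_by_region AS
    SELECT LOWER(TRIM(region)) AS region,
           SUM(CAST(amount AS REAL)) AS total
    FROM raw_orders
    WHERE TRIM(amount) <> ''
    GROUP BY LOWER(TRIM(region))
""")
warehouse.commit()

print(warehouse.execute("SELECT region, total FROM sales_by_region ORDER BY region").fetchall())
# prints [('north', 120.5), ('south', 120.25)]
```

Because the raw table stays in the warehouse, the transformation can be changed and re-run later without re-extracting from the source.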

Change Data Capture (CDC)

Organizations often need to stay up to date with changes made to source data without recopying all of it. This is achieved through change data capture (CDC), a common data integration technique. CDC identifies and captures only the changes made to source data, such as inserts, updates, and deletes, rather than re-extracting unchanged records. Organizations typically use CDC when they want to:

- Keep systems synchronized in real time or near-real time, such as syncing an operational database with a data warehouse.

- Reduce load on databases by avoiding full table scans or bulk extracts.

- Support event-driven architectures, such as triggering business actions when certain data changes.

- Enable incremental ETL pipelines for faster and more efficient data movement.
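The sketch below shows one way to implement CDC, trigger-based capture, using SQLite: triggers record every insert, update, and delete in a hypothetical change table, and a consumer applies only the changes newer than its last checkpoint to a target (a plain dictionary standing in for a warehouse table). Many production CDC tools instead read the database transaction log (log-based CDC), which avoids the extra work triggers add to every source write.

```python
import sqlite3

def setup_source(conn):
    """Create a source table plus triggers that log every change (trigger-based CDC)."""
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE customer_changes (
            seq  INTEGER PRIMARY KEY AUTOINCREMENT,  -- ordering of captured changes
            op   TEXT,                               -- 'I', 'U', or 'D'
            id   INTEGER,
            name TEXT
        );
        CREATE TRIGGER customers_ins AFTER INSERT ON customers BEGIN
            INSERT INTO customer_changes (op, id, name) VALUES ('I', NEW.id, NEW.name);
        END;
        CREATE TRIGGER customers_upd AFTER UPDATE ON customers BEGIN
            INSERT INTO customer_changes (op, id, name) VALUES ('U', NEW.id, NEW.name);
        END;
        CREATE TRIGGER customers_del AFTER DELETE ON customers BEGIN
            INSERT INTO customer_changes (op, id, name) VALUES ('D', OLD.id, NULL);
        END;
    """)

def apply_changes(conn, target, last_seq):
    """Apply only the changes captured after last_seq; return the new checkpoint."""
    rows = conn.execute(
        "SELECT seq, op, id, name FROM customer_changes WHERE seq > ? ORDER BY seq",
        (last_seq,),
    ).fetchall()
    for seq, op, cust_id, name in rows:
        if op == "D":
            target.pop(cust_id, None)
        else:  # inserts and updates both become an upsert on the target
            target[cust_id] = name
        last_seq = seq
    return last_seq

source = sqlite3.connect(":memory:")
setup_source(source)
target = {}

source.execute("INSERT INTO customers VALUES (1, 'Ada')")
source.execute("INSERT INTO customers VALUES (2, 'Grace')")
last = apply_changes(source, target, 0)  # initial sync

source.execute("UPDATE customers SET name = 'Ada L.' WHERE id = 1")
source.execute("DELETE FROM customers WHERE id = 2")
last = apply_changes(source, target, last)  # only the two new changes are read

print(target)  # prints {1: 'Ada L.'}
```

The second `apply_changes` call never scans the `customers` table; it reads just the change rows after the stored checkpoint.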

Data Replication

Data replication is an approach to data integration used to copy data from one system to another, typically to maintain consistency across systems or to improve availability. It involves duplicating data from a source to a target, either continuously or at scheduled intervals. Replication can be unidirectional or bidirectional, depending on the requirements. Organizations use data replication when they need to:

- Ensure data consistency, especially across geographically distributed systems

- Maintain real-time or near real-time copies for high availability and disaster recovery

- Improve performance by distributing data closer to end users or applications

- Support parallel access to the same data across multiple platforms
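A minimal sketch of unidirectional, scheduled replication: each run makes the replica an exact copy of a source table with the same schema, without transforming anything. Both databases are in-memory SQLite stand-ins and the `inventory` table is hypothetical; real replication tools usually ship incremental changes continuously rather than full copies.

```python
import sqlite3

SCHEMA = "CREATE TABLE inventory (sku TEXT PRIMARY KEY, qty INTEGER)"

source = sqlite3.connect(":memory:")
replica = sqlite3.connect(":memory:")
source.execute(SCHEMA)
replica.execute(SCHEMA)  # the replica uses the same schema as the source

def replicate(src, dst):
    """Full-refresh replication: make dst's inventory table an exact copy of src's."""
    rows = src.execute("SELECT sku, qty FROM inventory").fetchall()
    with dst:  # single transaction: committed on success, rolled back on error
        dst.execute("DELETE FROM inventory")
        dst.executemany("INSERT INTO inventory VALUES (?, ?)", rows)

source.executemany("INSERT INTO inventory VALUES (?, ?)", [("A-100", 5), ("B-200", 12)])
source.commit()
replicate(source, replica)  # in practice, triggered on a schedule or continuously

print(replica.execute("SELECT sku, qty FROM inventory ORDER BY sku").fetchall())
# prints [('A-100', 5), ('B-200', 12)]
```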

API-Based Integration

As the name suggests, API-based integration is a method that uses application programming interfaces (APIs) to enable systems to communicate and exchange data. APIs provide standardized endpoints for accessing data or services between applications. This approach is often used in environments with microservices, SaaS platforms, or loosely coupled systems. Organizations adopt API-based integration when they want to:

- Connect modern applications that expose functionality through REST or SOAP APIs

- Enable real-time or near-real-time data exchange between systems

- Reduce dependencies on traditional ETL processes in distributed architectures

- Support modular and scalable system design with flexible integration points
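The sketch below illustrates the request/response pattern behind REST-based integration using only Python's standard library. A tiny local HTTP server plays the role of a SaaS application exposing a hypothetical `/api/customers` endpoint, and a client pulls its JSON into the consuming system. Authentication, pagination, rate limiting, and retries, which real APIs usually require, are deliberately left out.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Data held by the hypothetical "SaaS application".
CUSTOMERS = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}]

class CustomerAPI(BaseHTTPRequestHandler):
    """Exposes one read-only JSON endpoint: GET /api/customers."""

    def do_GET(self):
        if self.path == "/api/customers":
            body = json.dumps(CUSTOMERS).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging

def fetch_customers(base_url):
    """Client side: call the endpoint and decode the JSON payload."""
    with urllib.request.urlopen(f"{base_url}/api/customers", timeout=5) as resp:
        return json.loads(resp.read().decode("utf-8"))

# Port 0 asks the OS for any free port.
server = HTTPServer(("127.0.0.1", 0), CustomerAPI)
threading.Thread(target=server.serve_forever, daemon=True).start()
base_url = f"http://127.0.0.1:{server.server_address[1]}"

customers = fetch_customers(base_url)
customers_by_id = {c["id"]: c["name"] for c in customers}  # reshape for the consumer

server.shutdown()
server.server_close()
print(customers_by_id)  # prints {1: 'Ada', 2: 'Grace'}
```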

Data Consolidation

As the name suggests, data consolidation combines data from different sources to create a centralized data repository or data store. Data analysts can use this repository for various purposes, such as reporting and data analysis. It can also serve as a data source for downstream applications. Data latency is a key factor that differentiates data consolidation from other data integration techniques: the shorter the latency, the fresher the data available in the data store for business intelligence and analysis.

Generally, there is some latency between the time updates occur in source systems and the time those updates are reflected in the data warehouse or target data store. This latency can vary depending on the data integration technologies in use and the business’s specific needs. However, with advancements in integrated big data technologies, it is possible to consolidate data and transfer changes to the destination in near-real time or real time.
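As a rough illustration, consolidation can be sketched as mapping records from several sources onto one shared schema in a central store. The two source feeds and their field names below are hypothetical, SQLite stands in for the central repository, and each row carries a `loaded_at` timestamp, which is one simple way to measure how stale the consolidated data is.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical extracts from two systems that describe customers differently.
crm_contacts = [{"contact_id": "C1", "full_name": "Ada Lovelace", "email": "ada@example.com"}]
billing_accounts = [{"acct": 42, "customer": "Grace Hopper", "mail": "grace@example.com"}]

central = sqlite3.connect(":memory:")
central.execute("""
    CREATE TABLE customers (
        source TEXT, source_key TEXT, name TEXT, email TEXT, loaded_at TEXT,
        PRIMARY KEY (source, source_key))
""")

def consolidate(conn, crm, billing):
    """Map each source's fields onto the central schema and upsert the rows."""
    now = datetime.now(timezone.utc).isoformat()  # load time, for tracking latency
    rows = [("crm", r["contact_id"], r["full_name"], r["email"], now) for r in crm]
    rows += [("billing", str(r["acct"]), r["customer"], r["mail"], now) for r in billing]
    with conn:
        conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?, ?, ?)", rows)

consolidate(central, crm_contacts, billing_accounts)
print(central.execute("SELECT source, name, email FROM customers ORDER BY name").fetchall())
# prints [('crm', 'Ada Lovelace', 'ada@example.com'), ('billing', 'Grace Hopper', 'grace@example.com')]
```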

Data Federation

Data federation, also known as federated data access or federated data integration, provides unified access to data spread across multiple sources, simplifying access for consuming users and front-end applications. In this technique, distributed data with different models is integrated into a virtual database with a unified data model. No physical data movement happens behind a federated virtual database. Instead, data abstraction creates a uniform user interface for data access and retrieval.

Whenever a user or an application queries the federated virtual database, the query is decomposed and sent to the relevant underlying data sources. In other words, data federation serves data on demand, unlike data consolidation, where data is physically integrated into a separate centralized data store.
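The decomposition step can be sketched in a few lines: a hypothetical `FederatedView` class keeps no data of its own, sends each logical query to every registered source, and merges the results at query time. Two in-memory SQLite databases stand in for the underlying systems; real federation engines also translate between query dialects, handle unavailable sources, and optimize joins across sources.

```python
import sqlite3

class FederatedView:
    """A virtual layer: holds connections to sources, never copies of their data."""

    def __init__(self):
        self.sources = {}

    def register(self, name, conn, table):
        # Table names come from trusted registration, not from end users.
        self.sources[name] = (conn, table)

    def query(self, min_amount):
        """Decompose one logical query into per-source queries and merge the results."""
        results = []
        for name, (conn, table) in self.sources.items():
            sql = f"SELECT id, amount FROM {table} WHERE amount >= ?"
            for row_id, amount in conn.execute(sql, (min_amount,)):
                results.append((name, row_id, amount))
        return sorted(results)

# Two independent systems with their own storage (hypothetical tables).
eu_db = sqlite3.connect(":memory:")
us_db = sqlite3.connect(":memory:")
eu_db.execute("CREATE TABLE eu_orders (id INTEGER, amount REAL)")
us_db.execute("CREATE TABLE us_orders (id INTEGER, amount REAL)")
eu_db.executemany("INSERT INTO eu_orders VALUES (?, ?)", [(1, 50.0), (2, 500.0)])
us_db.executemany("INSERT INTO us_orders VALUES (?, ?)", [(7, 250.0), (8, 20.0)])

view = FederatedView()
view.register("eu", eu_db, "eu_orders")
view.register("us", us_db, "us_orders")
print(view.query(min_amount=100))  # prints [('eu', 2, 500.0), ('us', 7, 250.0)]
```

Each call reads the sources' current contents, so a new order inserted into either database shows up in the next query without any copy job.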

Middleware Integration

Middleware integration techniques are the methods used to facilitate smooth data exchange between different systems. Middleware acts as a bridge between systems and applications, allowing them not only to communicate and share information but also to work together as a cohesive unit. For example, you can connect your old on-premises database to a modern cloud data warehouse using middleware integration and securely move data to the cloud.

Common techniques include message-oriented middleware (MOM), service-oriented architecture (SOA), enterprise service bus (ESB), and application programming interfaces (APIs). Middleware integration enables seamless communication, data transformation, and integration between disparate systems.
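Message-oriented middleware can be sketched with Python's standard `queue` module standing in for a message broker. A legacy system publishes messages in its own format, and a consumer on the other side translates them for the target system, so neither side needs to know the other's interface. The message fields are hypothetical, and a production setup would use a real broker with durable, acknowledged delivery.

```python
import json
import queue
import threading

broker = queue.Queue()  # stand-in for a message broker (the middleware)
warehouse_rows = []     # stand-in for the target system

def legacy_producer(records):
    """The on-premises system publishes records in its own format and moves on."""
    for cust_no, cust_name in records:
        broker.put(json.dumps({"CUST_NO": cust_no, "CUST_NM": cust_name}))
    broker.put(None)  # sentinel: no more messages

def warehouse_consumer():
    """The consumer translates each message into the target's schema."""
    while True:
        msg = broker.get()
        if msg is None:
            break
        payload = json.loads(msg)
        warehouse_rows.append({"customer_id": payload["CUST_NO"], "name": payload["CUST_NM"]})

consumer = threading.Thread(target=warehouse_consumer)
consumer.start()
legacy_producer([(101, "Ada"), (102, "Grace")])
consumer.join()
print(warehouse_rows)
# prints [{'customer_id': 101, 'name': 'Ada'}, {'customer_id': 102, 'name': 'Grace'}]
```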

Data Propagation

Data propagation is another data integration technique. It involves transferring data from an enterprise data warehouse to different data marts after the required transformations. As data in the warehouse continues to be updated, the changes are propagated to the target data marts synchronously or asynchronously. Two common data integration technologies for data propagation are enterprise application integration (EAI) and enterprise data replication (EDR).
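A simplified sketch of synchronous propagation: every write to a hypothetical warehouse table is pushed immediately to each registered data mart, and each mart applies its own transformation (here, a filter and a projection) before storing the row. Asynchronous propagation would place the changes on a queue for later delivery instead of calling the marts inline.

```python
class DataWarehouse:
    """Toy warehouse table that propagates each change to subscribed data marts."""

    def __init__(self):
        self.sales = {}
        self.marts = []  # (mart_rows, transform) pairs

    def subscribe(self, mart_rows, transform):
        self.marts.append((mart_rows, transform))

    def upsert(self, sale_id, row):
        self.sales[sale_id] = row
        # Synchronous propagation: every mart is updated before upsert() returns.
        for mart_rows, transform in self.marts:
            shaped = transform(row)
            if shaped is None:
                mart_rows.pop(sale_id, None)  # row does not belong in this mart
            else:
                mart_rows[sale_id] = shaped

# Hypothetical marts: one for the EMEA sales team, one for finance.
emea_mart, finance_mart = {}, {}

dw = DataWarehouse()
dw.subscribe(emea_mart, lambda r: {"amount": r["amount"]} if r["region"] == "EMEA" else None)
dw.subscribe(finance_mart, lambda r: {"region": r["region"], "amount": r["amount"]})

dw.upsert(1, {"region": "EMEA", "amount": 300, "rep": "Lin"})
dw.upsert(2, {"region": "APAC", "amount": 150, "rep": "Kai"})
print(emea_mart)     # prints {1: {'amount': 300}}
print(finance_mart)  # prints {1: {'region': 'EMEA', 'amount': 300}, 2: {'region': 'APAC', 'amount': 150}}
```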

Enterprise Information Integration (EII)

Enterprise Information Integration (EII) is a data integration strategy that delivers curated datasets on demand. Also considered a type of data federation technology, EII involves the creation of a virtual layer or a business view of underlying data sources. This layer shields the consuming applications and business users from the complexities of connecting to multiple source systems having different formats, interfaces, and semantics.

In other words, EII is a data integration approach that allows developers and business users to treat a range of data sources as if they were one database. This technology enables them to present incoming data in new ways. Unlike batch ETL, EII can easily handle real-time integration and delivery use cases, allowing business users to consume fresh data for data analysis and reporting.
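One way to picture EII's virtual layer is a SQL view defined over several separate databases: consumers query a single business view, while the data stays in the source schemas. In this sketch, SQLite's `ATTACH DATABASE` and a `TEMP VIEW` stand in for an EII server, and the `crm` and `erp` schemas and their columns are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Two separate in-memory databases stand in for independent source systems.
conn.execute("ATTACH DATABASE ':memory:' AS crm")
conn.execute("ATTACH DATABASE ':memory:' AS erp")

conn.execute("CREATE TABLE crm.contacts (cust_id INTEGER, full_name TEXT)")
conn.execute("CREATE TABLE erp.accounts (cust_ref INTEGER, balance_cents INTEGER)")
conn.executemany("INSERT INTO crm.contacts VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
conn.executemany("INSERT INTO erp.accounts VALUES (?, ?)", [(1, 12500), (2, 900)])

# The business view hides source names, formats, and join logic from consumers.
# A TEMP view is used because SQLite does not allow a regular view to reference
# tables in a different attached database.
conn.execute("""
    CREATE TEMP VIEW customer_balances AS
    SELECT c.cust_id     AS customer_id,
           c.full_name   AS customer_name,
           a.balance_cents / 100.0 AS balance
    FROM crm.contacts AS c
    JOIN erp.accounts AS a ON a.cust_ref = c.cust_id
""")

print(conn.execute("SELECT customer_name, balance FROM customer_balances ORDER BY customer_id").fetchall())
# prints [('Ada', 125.0), ('Grace', 9.0)]
```

The view stores nothing itself; each query reads the current rows from both source schemas and presents them in business terms (names and balances in currency units rather than cents).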

Enterprise Data Replication (EDR)

Used as a data propagation technique, Enterprise Data Replication (EDR) is a real-time data consolidation method. It involves moving data from one storage system to another. In its simplest form, EDR consists of moving a dataset from one database to another with the same schema. Today, the process is often more complex, involving different source and target databases, and data may be replicated at regular intervals, in real time, or sporadically, depending on the needs of the enterprise. EDR differs from ETL in that it does not involve any data transformation or manipulation.

In addition to these key data integration technologies, enterprises with complex data management architectures also use Enterprise Application Integration (EAI) and other event-based and real-time technologies to keep up with the data needs of their business users.

6 Data Integration Technologies

To implement the techniques discussed above, you need specialized tools, software, platforms, or infrastructure. These are called data integration technologies and describe what you use to perform the integration. Common data integration technologies include:

ETL Tools

These are software tools or platforms used primarily for data warehousing use cases. ETL tools simplify and automate the process of data extraction, transformation, and loading. Most are standalone tools that focus specifically on the ETL aspect of data integration. Popular ETL tools include Astera Data Pipeline, Talend, AWS Glue, and Informatica.

ELT Tools

Like ETL tools, ELT tools automate the process of data movement. Since the data is loaded before any transformation occurs, ELT platforms work best for use cases where large volumes of raw or unstructured data need to be loaded into a data warehouse or data lake quickly.

Unified Data Integration Platforms

These are full-fledged integration solutions that automate the entire data integration process end to end. Instead of focusing on a single data integration technique, these platforms stand apart by offering a range of ways to integrate data. For example, Astera’s integration platform enables organizations to unify data using techniques like ETL, ELT, CDC, and APIs. Many data integration tools can connect to both on-premises and cloud environments, which is a prerequisite for most data migration use cases.

Data Pipelines

As the name suggests, data pipeline builders are specialized tools designed to move data from source systems to a target system. Many of them let business users build data pipelines without writing code, while others, such as Apache Airflow, define pipelines in code and are aimed at engineers. Astera Data Pipeline, Apache Airflow, Informatica, and Azure Data Factory are some examples of data pipeline tools.
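Under the hood, many pipeline tools model a pipeline as a directed acyclic graph (DAG) of steps that run in dependency order. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to order a few hypothetical steps. It is not the API of Airflow or any other tool named above; those tools add scheduling, retries, parallelism, and monitoring on top of this basic idea.

```python
from graphlib import TopologicalSorter

run_log = []

def make_step(name):
    """Create a hypothetical pipeline step that only records that it ran."""
    def step():
        run_log.append(name)
    return step

# Each key depends on every step listed in its set.
dependencies = {
    "extract_crm": set(),
    "extract_erp": set(),
    "transform": {"extract_crm", "extract_erp"},
    "load_warehouse": {"transform"},
    "refresh_dashboard": {"load_warehouse"},
}
steps = {name: make_step(name) for name in dependencies}

# static_order() yields each step only after all of its dependencies.
for name in TopologicalSorter(dependencies).static_order():
    steps[name]()

print(run_log)
```

The relative order of the two independent extract steps is not guaranteed, but `transform` always runs after both of them and the dashboard refresh always runs last.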

Data Warehouse Builders

Some data warehouse platforms allow organizations to design their own data warehouse and populate it with company-wide data. A popular example is Astera Data Warehouse Builder, which uses a metadata-driven approach to create the data warehouse, accelerating development and delivering analysis-ready data.

API Integration Tools

API integration tools use APIs to integrate data and functionality across systems. These platforms sit at the heart of modern, loosely coupled architectures, especially in cloud-native, microservices, and hybrid environments. API integration tools serve both data integration and application interoperability purposes.

Which Data Integration Technique is Right for Your Business?

The choice depends on the specific needs and goals of your business. The optimal technique is determined by factors such as data volume, latency requirements, infrastructure, and business objectives. For example, if you require centralized analytics and reporting, ETL might be suitable. On the other hand, if you want to leverage the processing power of a data warehouse, ELT could be a good fit. For real-time access without centralizing data, data federation or enterprise information integration might be the right choice.

| Data Integration Technique | Best For | Pros | Cons |
|---|---|---|---|
| ETL (Extract, Transform, Load) | Batch processing where data needs to be cleaned and structured before loading into a data warehouse | Strong data quality control; Good for complex transformations; Established tooling | Slower for large datasets; Less suited to real-time use cases |
| ELT (Extract, Load, Transform) | Modern cloud platforms with scalable processing power for in-warehouse transformations | Faster data ingestion; Leverages warehouse computing; Scales easily | Requires robust target system; Harder to manage transformation logic |
| CDC (Change Data Capture) | Real-time or near-real-time data syncing with minimal system load | Efficient; Timely updates; Low source impact | Complex to configure; Dependent on log retention and schema change handling |
| Data Replication | Copying data between systems for redundancy, backup, or high availability | Fast data transfer; Works across geographies; Good for operational continuity | No data transformation; High network and storage usage |
| API-Based Integration | Integrating applications and data sources through RESTful or event-based APIs | Real-time integration; Fine-grained access; Flexible with SaaS systems | Requires API availability; Complex orchestration and error handling |
| Data Consolidation | Combining data from multiple sources into a central store for reporting or analysis | Centralizes data for governance and analytics; Improves consistency | Often involves latency; Can become a bottleneck if not optimized |
| Data Federation | Virtual access to data from multiple sources without physically moving it | No data duplication; Fast setup; Real-time query capability | Performance depends on source systems; Limited transformation options |
| Middleware Integration | Connecting heterogeneous applications and systems in real time | Supports synchronous and asynchronous communication; Reusable integration logic | High complexity; Needs strong governance and monitoring |
| Data Propagation | Pushing data changes from one system to another using messaging or replication | Real-time replication; Good for operational integration | Risk of data conflicts; Doesn’t centralize data for analytics |
| Enterprise Information Integration (EII) | Providing a unified view of data across systems via abstraction layers | No data movement; Delivers real-time access; Flexible source handling | Query performance challenges; Complex security and metadata management |
| Enterprise Data Replication (EDR) | Replicating data across systems for backup, high availability, or synchronization | Ensures availability; Good for disaster recovery and distributed environments | High storage cost; May lack transformation capabilities |

Automate Data Integration With Astera Data Pipeline

Astera Data Pipeline is an AI-driven data integration platform that enables users to ingest, transform, cleanse, and consolidate enterprise-wide data into any target system, whether deployed on-premises or in the cloud. It supports modern integration techniques to ensure efficiency, scalability, and data consistency. If you’re looking to implement an automated data integration platform, here’s how Astera Data Pipeline can help you:

- 100% no-code integration with a simple yet powerful user interface, enabling everyone to build and maintain their own data pipelines

- Integrate data using various techniques, including ETL, ELT, CDC, and APIs

- Ingest data from 100+ sources using built-in connectors or build your own custom API (CAPI) connector for added flexibility

- Easily handle high volumes of data at peak performance

- Automate data processing tasks and orchestrate your integration projects seamlessly

- Format your data as required using built-in data transformations and functions

- Deliver analysis-ready data to your business intelligence platform for faster insights

And much more, all without writing a single line of code.

Take the next step by downloading a free trial. Alternatively, you can contact us to discuss your use case and see how Astera can help you with your data integration approach.