If you’re on the lookout for a data pipeline tool that best satisfies the needs of your organization, then look no further. This article serves as a guide to data pipeline tools, explaining what they are, their types, and the challenges they help businesses with. It also provides a curated list of the best data pipeline tools and the factors to consider when selecting one.

TL;DR? Here’s the list of the best data pipeline tools to consider in 2024:

- Astera

- Apache Airflow

- Apache Kafka

- AWS Glue

- Google Cloud Dataflow

- Microsoft Azure Data Factory

- Informatica PowerCenter

- Talend Data Integration

- Matillion

- StreamSets Data Collector

What are data pipeline tools?

Data pipeline tools are software applications that enable you to build data pipelines, often through a graphical user interface (GUI). The terms “data pipeline tools,” “data pipeline software,” “data pipeline platform,” and “data pipeline builder” all mean the same thing and are used interchangeably in the data management space. These solutions simplify the process of extracting data from various sources, transforming it as needed, and loading it into a centralized repository for analysis.

While the target system is usually a data warehouse—whether on-premises or cloud-based—organizations are increasingly turning to data lakes to benefit from their ability to store vast amounts of all types of data. The added flexibility enables you to uncover hidden insights that are not readily apparent in a traditional data warehouse, allowing for a more comprehensive data analysis.

Data pipeline tools provide the infrastructure needed to automate workflows while ensuring data quality and availability. A modern data pipeline tool aims to meet the needs of both data professionals and business users. With the rising need to comply with regulatory requirements, many of these tools now include built-in data governance features, such as data lineage, data catalog, and data classification.

The goal with data pipeline tools is to reduce the need for manual intervention and orchestrate the seamless movement of data from source to destination for accurate analysis and decision-making.
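
The extract, transform, load sequence described in this section can be sketched in a few lines of Python. This is a minimal, tool-agnostic illustration, not how any product in this article works internally. The inline CSV text stands in for a hypothetical source system, and an in-memory SQLite database stands in for the central repository.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from a database, API, or file.
RAW_ORDERS = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,
"""

def extract(raw_text):
    """Extract: parse rows from the source into dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Transform: trim and normalize names, cast types, and drop rows missing an amount."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # skip incomplete records instead of loading bad data
        cleaned.append((int(row["order_id"]), row["customer"].strip().title(), float(row["amount"])))
    return cleaned

def load(records, conn):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a warehouse or data lake
load(transform(extract(RAW_ORDERS)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Keeping each stage a separate function mirrors how pipeline tools let you swap a source, a transformation, or a destination without rewriting the rest.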

Types of data pipeline tools

Data pipeline tools can be categorized into various types based on their functionality and use cases. Here are some common types:

Real-time and batch processing data pipeline tools

Real-time data pipeline tools are designed to process and analyze data as it is generated. These tools provide immediate insights and responses, which makes them crucial for applications that require up-to-the-minute information. Batch data pipeline tools, on the other hand, process data in discrete chunks, or batches, typically on a schedule. These tools are suitable for scenarios where immediate analysis is not critical.

| | Real-time data pipeline tools | Batch data pipeline tools |
|---|---|---|
| Processing | Deliver low latency for quick analysis | Process data in discrete batches, often on a schedule |
| Use cases | Immediate insights and responses | Scenarios where immediate analysis is not critical |
| Implementation | Often require more resources | Simpler to implement and maintain |
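
The difference between the two processing models can be illustrated with a toy Python sketch. The batch function groups records into chunks before handing them off. Grouping here is by count, although real tools often batch by schedule or time window. The streaming function handles each record the moment it arrives. The record source and handlers are hypothetical placeholders.

```python
from typing import Callable, Iterable, List

def batch_process(records: Iterable[int], batch_size: int,
                  handle: Callable[[List[int]], None]) -> None:
    """Batch mode: accumulate records and hand them off in chunks."""
    batch: List[int] = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            handle(batch)
            batch = []  # start a fresh list so the handed-off batch is not mutated
    if batch:  # flush the final, possibly smaller, batch
        handle(batch)

def stream_process(records: Iterable[int], handle: Callable[[int], None]) -> None:
    """Real-time mode: handle each record as soon as it arrives."""
    for record in records:
        handle(record)

batches: List[List[int]] = []
batch_process(range(7), batch_size=3, handle=batches.append)
print(batches)  # [[0, 1, 2], [3, 4, 5], [6]]

events: List[int] = []
stream_process(range(3), handle=events.append)
```

In the batch case, a record waits until its batch fills or the input ends. This waiting is the latency trade-off summarized in the table.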

Open-source and proprietary data pipeline tools

Popular open-source data pipeline tools, such as Apache NiFi or Apache Airflow, have gained widespread adoption due to their flexibility, community support, and the ability for users to tailor them to fit diverse data processing requirements.

| | Open-source data pipeline tools | Proprietary data pipeline tools |
|---|---|---|
| Development | Developed collaboratively by a community | Developed and owned by for-profit companies |
| Accessibility | Freely accessible source code | Generally not free for commercial use; some offer freemium versions |
| Support | Typically lack official support but are backed by the community | Usually offer dedicated support, which varies by vendor |

Open-source data pipeline tools often have a steep learning curve, which makes them challenging for non-technical and business users. Proprietary data pipeline tools, on the other hand, are generally easier to use and simplify the process, even for business users.

On-premises and cloud data pipeline tools

On-premises tools operate within the organization’s infrastructure, providing a heightened level of control and security over data processing. On the other hand, cloud data pipeline tools operate on infrastructure provided by third-party cloud service providers, offering organizations a flexible and scalable solution for managing their data workflows.

| | On-premises data pipeline tools | Cloud data pipeline tools |
|---|---|---|
| Infrastructure | Operate within the organization’s infrastructure | Operate on third-party cloud infrastructure |
| Control and security | Provide a high level of control and security | Focus more on flexibility and scalability |
| Operational responsibilities | Require managing and maintaining the entire infrastructure | Offer managed services for tasks like data storage, compute resources, and security |

Due to compliance requirements, industries such as finance and healthcare often favor on-premises data pipeline tools, which provide autonomy but require managing the infrastructure. Cloud data pipeline tools, in contrast, run on third-party infrastructure and offer flexibility and managed services that reduce the operational burden.

The 10 best data pipeline tools in 2024

Let’s look at some of the best data pipeline tools of 2024 in detail:

Astera

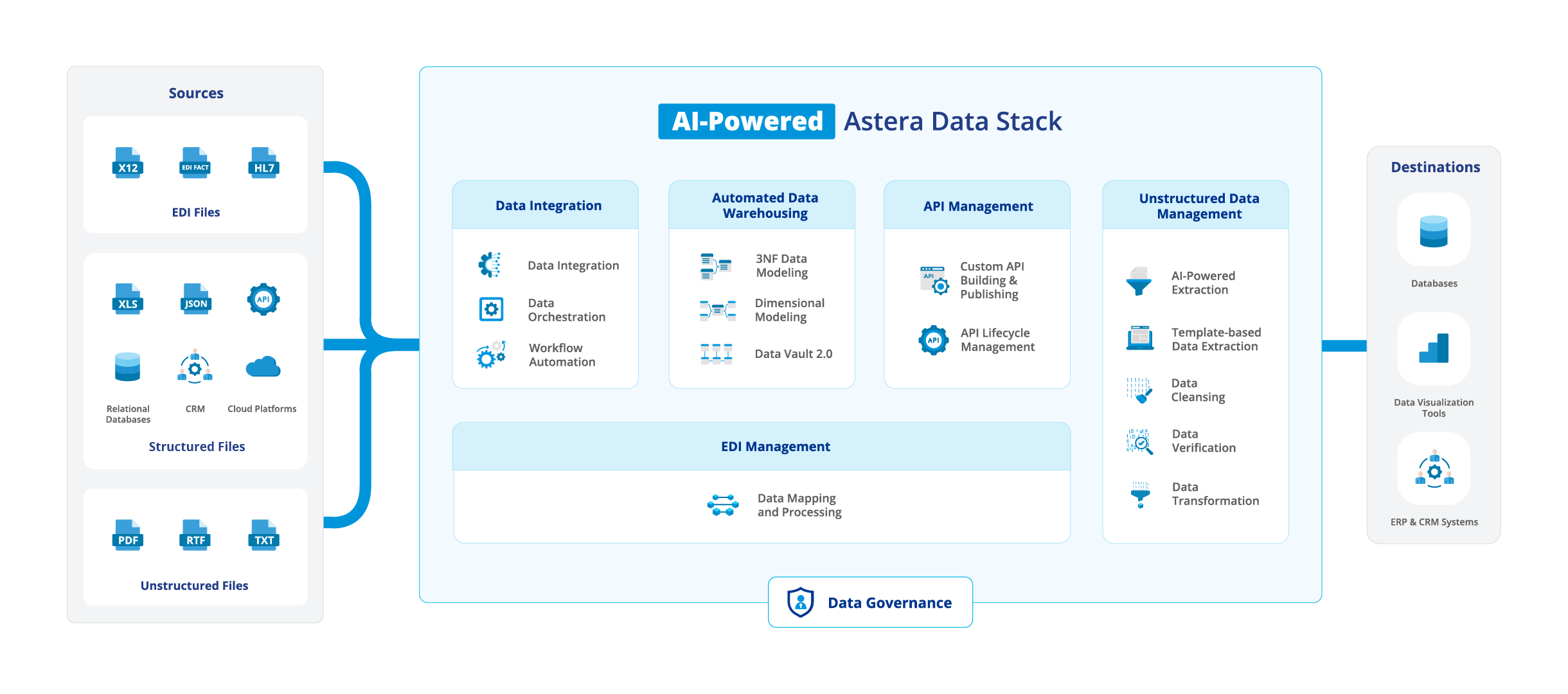

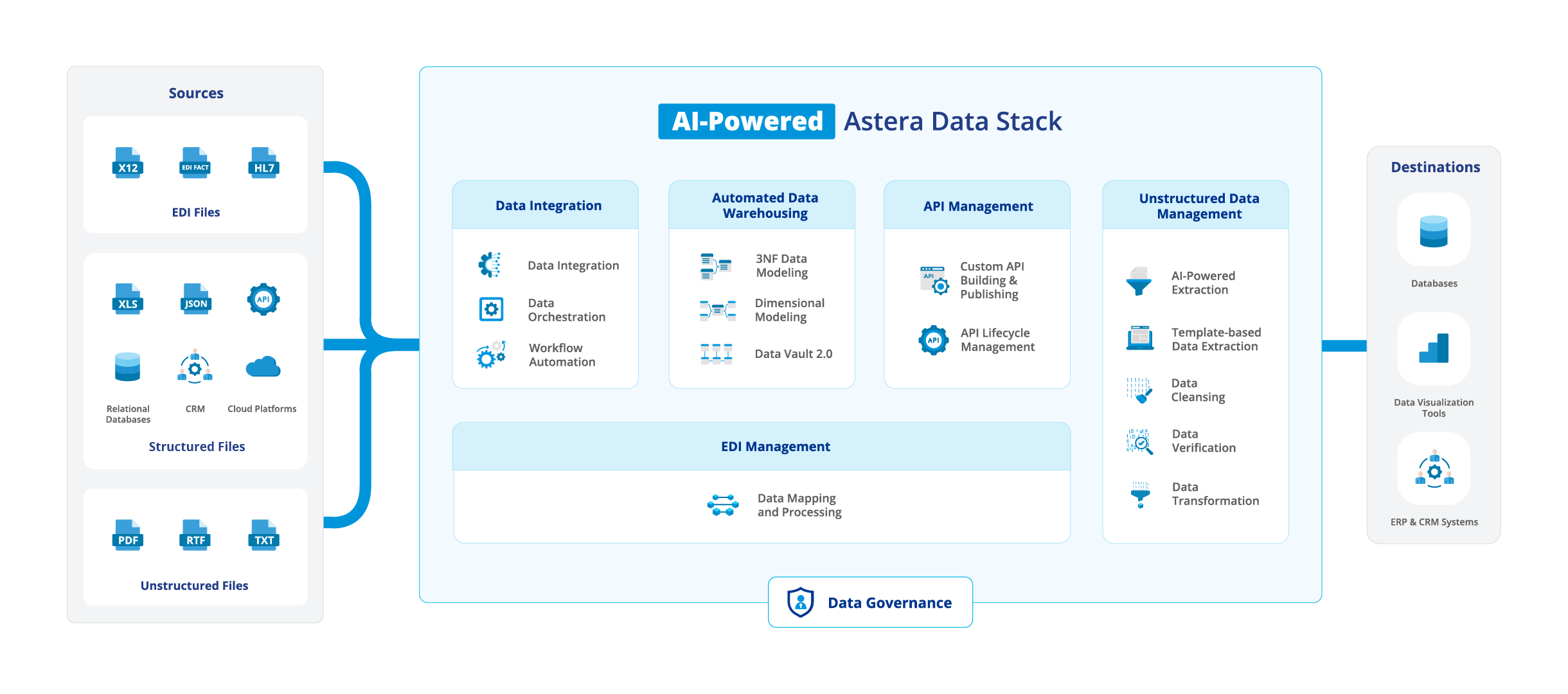

Astera is a comprehensive, 100% no-code data management platform with powerful capabilities to build data pipelines. It offers a simple-to-use visual UI along with built-in capabilities for connecting to a wide range of sources and destinations, data transformation and preparation, workflow automation, process orchestration, data governance, and big data processing.

It’s a unified solution that brings these capabilities together in a single platform.

Astera stands out in the market for several reasons. Its wide range of data integration features enables users to design, deploy, and monitor data pipelines efficiently. The visual design interface simplifies pipeline creation and makes it accessible to both technical and non-technical users. The interface is also easy to navigate and can save considerable manual effort.

Astera provides end-to-end visibility and control over data workflows and enables users to track pipeline performance, identify bottlenecks, and troubleshoot issues before they escalate. The platform also offers comprehensive data transformation capabilities, empowering users to cleanse, enrich, and manipulate data within the pipeline itself.

Additionally, Astera offers advanced scheduling and dependency management features that help complex dataflows and workflows run reliably. Astera also emphasizes collaboration and teamwork. The platform supports role-based access control, so multiple users can work on pipeline development and management with permissions suited to their roles.

Apache Airflow

Apache Airflow is an open-source workflow orchestration tool for creating, scheduling, and monitoring complex dataflows and workflows. In Airflow, workflows are defined in Python as directed acyclic graphs (DAGs). The tool offers flexibility and extensive integrations.

Pros

- A flexible and scalable data pipeline solution

- Active community helpful in resolving common challenges

- Ability to monitor tasks and set alerts

Cons

- Steep learning curve makes it difficult to learn and use effectively

- Being an open-source tool means users will need to rely on in-house expertise for their dataflows

- The scheduler can be slow, especially when scheduling many tasks
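
Airflow represents a workflow as a directed acyclic graph (DAG) of tasks. It starts each task only after that task’s upstream tasks have finished. The sketch below imitates that dependency ordering with Python’s standard-library `graphlib` module (Python 3.9+). It is not Airflow’s API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks, each mapped to the set of upstream tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform"},
    "send_report": {"load_warehouse"},
}

def run_in_dependency_order(graph):
    """Execute tasks so that every task runs after all of its upstream tasks."""
    executed = []
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    while sorter.is_active():
        ready = sorter.get_ready()  # tasks whose dependencies are all done; could run in parallel
        for task in sorted(ready):
            executed.append(task)   # a real scheduler would launch the task here
            sorter.done(task)
    return executed

print(run_in_dependency_order(dependencies))
```

The two extract tasks become ready together because neither depends on the other. An orchestrator can therefore run them in parallel before `transform` starts.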

Apache Kafka

Apache Kafka is an open-source distributed event streaming platform that lets users ingest and process data in real time. It provides a distributed messaging system in which producers publish messages to topics. Consumers (downstream processing systems, databases, or other applications) subscribe to these topics and process the messages as they arrive.

Pros

- Real-time data processing

- Handles high volumes of data with horizontal scaling

- Offers fault-tolerant replication for mission-critical data

Cons

- Steep learning curve makes it difficult to learn and use effectively, particularly when configuring advanced features such as replication, partitioning, and security.

- For simple scenarios or low data volumes, Kafka can be overkill

- While Kafka itself is open-source, deploying and managing a Kafka cluster involves costs associated with infrastructure, storage, and operational resources
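
The publish/subscribe model described above can be pictured with a toy, in-memory Python sketch. A topic is split into append-only partitions, and a producer routes each message by a hash of its key, so messages with the same key stay in order. Each consumer tracks its own read offset, and records stay in the log after they are read. This illustrates the concepts only. It is not Kafka’s client API, and the class names and keys are hypothetical.

```python
import zlib
from typing import Dict, List, Tuple

class Topic:
    """Toy stand-in for a topic: a list of append-only partition logs (hypothetical class)."""

    def __init__(self, num_partitions: int):
        self.partitions: List[List[Tuple[str, str]]] = [[] for _ in range(num_partitions)]

    def publish(self, key: str, value: str) -> int:
        """Producer side: choose a partition from a hash of the key, so one key maps to one partition."""
        # crc32 is used only for illustration; Kafka's own partitioner uses a different hash.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[partition].append((key, value))
        return partition

class Consumer:
    """Consumer side: remembers its own read offset in each partition (hypothetical class)."""

    def __init__(self, topic: Topic):
        self.topic = topic
        self.offsets: Dict[int, int] = {p: 0 for p in range(len(topic.partitions))}

    def poll(self) -> List[Tuple[str, str]]:
        """Return records published since the last poll and advance the offsets."""
        new_records: List[Tuple[str, str]] = []
        for p, log in enumerate(self.topic.partitions):
            new_records.extend(log[self.offsets[p]:])
            self.offsets[p] = len(log)  # records remain in the log; only this consumer's position moves
        return new_records

orders = Topic(num_partitions=3)
for i in range(5):
    orders.publish(key=f"customer-{i % 2}", value=f"order-{i}")

analytics = Consumer(orders)  # independent consumers each keep their own offsets
first = analytics.poll()      # all five records published so far
second = analytics.poll()     # empty until new records arrive
```

Adding partitions spreads the log across more storage and consumers. This is the idea behind the horizontal scaling listed in the pros.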

AWS Glue

AWS Glue is a fully managed ETL service on Amazon Web Services. The data pipeline tool offers integration with various AWS services and supports both batch and streaming processing.

Pros

- The biggest advantage of using AWS Glue as a data pipeline tool is that it offers tight integration within the AWS ecosystem.

- Offers built-in features for data quality management

- Can be cost-effective for basic ETL processes

Cons

- Users need a good understanding of Apache Spark to fully utilize AWS Glue, especially when it comes to data transformation

- While it offers integration with external data sources, managing and controlling them remains the responsibility of the user

- Primarily caters to batch-processing use cases; its streaming support has limitations for near real-time data processing

Google Cloud Dataflow

A serverless data processing service by Google Cloud that offers batch and stream processing with high availability and fault tolerance.

Pros

- Offers both batch and stream processing

- Ability to move large amounts of data quickly

- Offers high observability into the ETL process

Cons

- Requires considerable development effort compared to no-code data pipeline tools

- Users on review platforms report that it’s difficult to learn and use, and the documentation is lacking

- Debugging an issue in a pipeline can be cumbersome

Microsoft Azure Data Factory

Azure Data Factory is an ETL and data integration service offered by Microsoft. It facilitates orchestration of data workflows across diverse sources.

Pros

- Offers a no-code, visual environment for building pipelines

- As a Microsoft service, it integrates tightly with the rest of the Azure ecosystem

- Azure Data Factory offers a single monitoring dashboard for a holistic view of data pipelines

Cons

- The visual interface quickly becomes cluttered as the workflows become more complex

- Does not offer native support for change data capture from some of the most common databases

- The error messages are not descriptive and lack context, making it hard to troubleshoot

Informatica PowerCenter

Informatica PowerCenter is data pipeline software that can extract, transform, and load data from various sources.

Pros

- Offers features to maintain data quality

- Ability to handle large amounts of data

- Like other data pipeline software, it provides built-in connectors for different data sources and destinations

Cons

- Steep learning curve, even for beginners with a technical background, due to a confusing and inconsistent mix of services and interfaces

- Handling large amounts of data is resource-intensive with Informatica

- Its cost can be prohibitive, especially for small businesses

Talend Data Integration

Talend Data Integration is an enterprise data integration tool. It enables users to extract, transform, and load data into a data warehouse or data lake.

Pros

- Handles large amounts of data

- Ability to integrate on-premises and cloud systems

- Can integrate with different business intelligence (BI) platforms

Cons

- Requires considerable processing power, which can make it less efficient to run

- Joining tables from different schemas is not straightforward during ETL

- Users frequently report that Talend’s documentation is not comprehensive enough

Matillion

An ETL platform that allows data teams to extract, move, and transform data. Although it lets users orchestrate workflows, it focuses mainly on data integration.

Pros

- Offers a graphical user interface

- Wide range of built-in transformations

- Generally easy to use compared to Informatica and Talend

Cons

- Git integration is not as robust as Astera’s

- While it offers built-in connectors, setting them up is not straightforward in some cases

- Doesn’t offer advanced data quality features

StreamSets Data Collector

A data ingestion platform focused on real-time data pipelines with monitoring and troubleshooting capabilities.

Pros

- Ability to schedule jobs

- Features a graphical UI

- Supports both batch and stream processing

Cons

- Understanding and filtering the logs is not a straightforward task

- JDBC-based processing can be significantly slow

- Debugging takes up a considerable amount of time

How to select a data pipeline tool?

Selecting the right data pipeline tool is essential for organizations to effectively manage and process their data. Several factors come into play:

- Scalability: Assess whether the tool can handle your current and future data volume and velocity requirements. Look for horizontal and vertical scalability to accommodate expanding data needs.

- Data sources and targets: Ensure the data pipeline tool supports the data sources and destinations relevant to your organization, including databases, file formats, cloud services, data warehouses, data lakes, and APIs.

- Data transformation and integration: Evaluate the tool’s capabilities for data cleaning, transformation, and integration. Look for features that simplify complex data mapping, merging, and handling different data types.

- Real-time vs. batch processing: Determine if the data pipeline tool supports your preferred data processing mode. Assess whether real-time streaming or batch processing is suitable for your pipeline needs.

- Ease of use and learning curve: Consider the tool’s user interface, configuration simplicity, and usability. Look for intuitive interfaces, visual workflows, and drag-and-drop functionalities to streamline pipeline development and management.

- Monitoring and alerting: Check if the data pipeline tool provides comprehensive monitoring and alerting features. It should offer visibility into pipeline health, performance, and status, including logs, metrics, error handling, and notifications for efficient troubleshooting.

- Security and compliance: Ensure the tool provides robust security measures such as encryption, access controls, and compliance with relevant regulations (e.g., GDPR, HIPAA) when handling sensitive or regulated data.

- Integration with your existing infrastructure: Evaluate how well the data pipeline tool integrates with your current infrastructure, including data storage systems and analytics platforms. Seamless integration can save time and effort in pipeline setup and maintenance.

- Support and documentation: Assess the level of support and availability of documentation from the tool’s vendor. Look for comprehensive documentation, user forums, and responsive support channels to assist with troubleshooting.

- Total cost of ownership (TCO): Consider the overall cost of the data pipeline tool, including licensing, maintenance, and additional resources required for implementation and support. Evaluate if the tool provides good value based on its features and capabilities.
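
One common way to turn these criteria into a decision is a weighted scoring matrix. The Python sketch below is a hypothetical example using a subset of the criteria above. The weights, the tool names, and the 1 to 5 scores are placeholders to be replaced with your own evaluation. They are not ratings of the tools reviewed in this article.

```python
# Hypothetical weights (summing to 1.0) reflecting one organization's priorities.
WEIGHTS = {
    "scalability": 0.20,
    "connectors": 0.20,
    "ease_of_use": 0.15,
    "monitoring": 0.10,
    "security": 0.15,
    "tco": 0.20,
}

# Hypothetical 1-5 scores from an internal evaluation of two placeholder candidates.
CANDIDATES = {
    "Tool A": {"scalability": 4, "connectors": 5, "ease_of_use": 3, "monitoring": 4, "security": 4, "tco": 2},
    "Tool B": {"scalability": 3, "connectors": 4, "ease_of_use": 5, "monitoring": 3, "security": 4, "tco": 4},
}

def weighted_score(scores, weights):
    """Sum each criterion's score multiplied by its weight; refuse incomplete evaluations."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(scores[criterion] * weight for criterion, weight in weights.items())

ranking = sorted(CANDIDATES, key=lambda tool: weighted_score(CANDIDATES[tool], WEIGHTS), reverse=True)
print(ranking)
```

With these placeholder numbers, Tool B ranks first (about 3.85 versus 3.65) despite weaker connectors, because it scores higher on ease of use and TCO. Changing the weights can reverse the result, which is why they should reflect your own requirements.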

What business challenges do data pipeline tools overcome?

Businesses rely on automation and advanced technologies, such as artificial intelligence (AI) and machine learning (ML), to manage and make use of extremely high volumes of data. Handling high-volume data is just one of many challenges that data pipeline tools help businesses overcome. These tools address a spectrum of challenges that organizations face in navigating the complexities of data processing.

Data Integration and Consolidation

- Challenge: Businesses often have data scattered across various systems and sources, making it challenging to integrate and consolidate for a unified view.

- Solution: Data pipeline tools facilitate the extraction, transformation, and loading processes, enabling seamless integration and consolidation of data from diverse sources into a central repository.

Real-Time Decision-Making

- Challenge: Traditional batch processing methods result in delayed insights, hindering real-time decision-making.

- Solution: Real-time data processing enables businesses to analyze and act on data as it is generated, supporting timely decision-making.

Data Quality and Consistency

- Challenge: Inaccuracies, inconsistencies, and poor data quality can lead to unreliable insights and decision-making.

- Solution: Modern data pipeline tools, like Astera, offer data quality features, allowing businesses to clean, validate, and enhance data, ensuring accuracy and consistency.
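
Rule-based validation is one typical form that data quality features take. The Python sketch below is a generic illustration, not Astera’s implementation, and the rules and field names are hypothetical. Records that pass every rule continue down the pipeline. Records that fail are set aside with their reasons for review.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]

# Hypothetical validation rules: (rule name, predicate that a valid record must satisfy).
RULES: List[Tuple[str, Callable[[Record], bool]]] = [
    ("email present", lambda r: bool(r.get("email"))),
    ("email has @", lambda r: "@" in str(r.get("email", ""))),
    ("age in range", lambda r: isinstance(r.get("age"), int) and 0 <= r["age"] <= 120),
]

def validate(records: List[Record]) -> Tuple[List[Record], List[Tuple[Record, List[str]]]]:
    """Split records into those passing every rule and those failing, with the names of failed rules."""
    valid: List[Record] = []
    rejected: List[Tuple[Record, List[str]]] = []
    for record in records:
        failures = [name for name, check in RULES if not check(record)]
        if failures:
            rejected.append((record, failures))
        else:
            valid.append(record)
    return valid, rejected

valid, rejected = validate([
    {"email": "a@example.com", "age": 34},
    {"email": "", "age": 29},
    {"email": "b@example.com", "age": 250},
])
```

Keeping rejected records with their reasons, rather than silently dropping them, makes it possible to fix problems at the source.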

Scalability and Performance

- Challenge: Handling growing volumes of data can strain traditional systems, leading to performance issues and scalability challenges.

- Solution: Cloud-based data pipeline tools provide scalable infrastructure, allowing businesses to dynamically adjust resources based on workload demands, ensuring optimal performance.
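
One simple way to picture “dynamically adjusting resources” is a scaling rule that sizes a worker pool to the current backlog. The Python sketch below is a hypothetical policy, not any cloud provider’s autoscaler. The per-worker throughput and the minimum and maximum bounds are assumptions.

```python
import math

def desired_workers(backlog_records: int, records_per_worker: int,
                    min_workers: int = 1, max_workers: int = 20) -> int:
    """Return enough workers to clear the backlog in one processing interval, within fixed bounds.

    records_per_worker is an assumed throughput per worker per interval.
    """
    if records_per_worker <= 0:
        raise ValueError("records_per_worker must be positive")
    needed = math.ceil(backlog_records / records_per_worker)
    return max(min_workers, min(max_workers, needed))

print(desired_workers(45_000, 10_000))  # 5
```

The lower bound keeps a warm worker available when the backlog is empty. The upper bound caps spending during unexpected spikes.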

Operational Efficiency

- Challenge: Manually managing and orchestrating complex data workflows can be time-consuming and prone to error.

- Solution: Workflow orchestration tools automate and streamline data processing tasks, improving operational efficiency and reducing the risk of human error.
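
Orchestration tools typically automate recovery steps that an operator would otherwise perform by hand. For example, they retry a failed task and raise an alert when the retries run out. Here is a minimal, tool-agnostic Python sketch of that pattern. The function and the flaky task are hypothetical, and the error log line marks where a real tool would send a notification.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")
logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")

def run_with_retries(task: Callable[[], T], retries: int = 3, base_delay: float = 0.5) -> T:
    """Run a task, retrying with exponential backoff; log an error and re-raise after the last attempt."""
    for attempt in range(1, retries + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == retries:
                log.error("task failed after %d attempts: %s", attempt, exc)  # hypothetical alert hook
                raise
            delay = base_delay * 2 ** (attempt - 1)
            log.warning("attempt %d failed (%s); retrying in %.2fs", attempt, exc, delay)
            time.sleep(delay)
    raise ValueError("retries must be at least 1")  # only reached when retries < 1

# Hypothetical flaky source that fails twice before succeeding.
calls = {"n": 0}

def flaky_extract() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return "rows"

result = run_with_retries(flaky_extract, retries=3, base_delay=0.01)
```

Exponential backoff gives a temporarily unavailable source progressively more time to recover, and it avoids overloading that source with immediate repeat requests.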

The bottom line

Data pipeline tools have become an essential component of the modern data stack. As the amount of data continues to rise, these tools become even more important for managing the flow of information from ever-growing sources.

However, no two tools are created equal. Choosing the right tool depends on several factors. Some tools excel at handling real-time data streams, while others are better suited for batch processing of large datasets. Similarly, some solutions offer user-friendly interfaces with drag-and-drop functionalities, while others require coding experience for customization. Ultimately, the best data pipeline tool will be the one that satisfies the business requirements.

Authors:

Khurram Haider
